Chiptune refers to a collection of related music production and performance practices sharing a history with video game soundtracks. The evolution of early chiptune music tells an alternate narrative about the hardware, software, and social practices of personal computing in the 1980s and 1990s. By digging into the interviews, text files, and dispersed ephemera that have made their way to the Web, we identify some of the common folk-historical threads among the commercial, noncommercial, and ambiguously commercial producers of chiptunes with an eye toward the present-day confusion surrounding the term chiptune.

In its strictest use, the term chiptunes refers to music composed for the microchip-based audio hardware of early home computers and gaming consoles. The best of these chips exposed a sophisticated polyphonic synthesizer to composers who were willing to learn to program them. By experimenting with the chips' oscillating voices and noise generator, chiptunes artists in the 1980s—many of them creating music for video games—developed a rich palette of sounds with which to emulate popular styles like heavy metal, techno, ragtime, and (for lack of a better term) Western classical. Born out of technical limitation, their soaring flutelike melodies, buzzing square wave bass, rapid arpeggios, and noisy gated percussion eventually came to define a style of its own, which is being called forth by today's pop composers as a matter of preference rather than necessity.

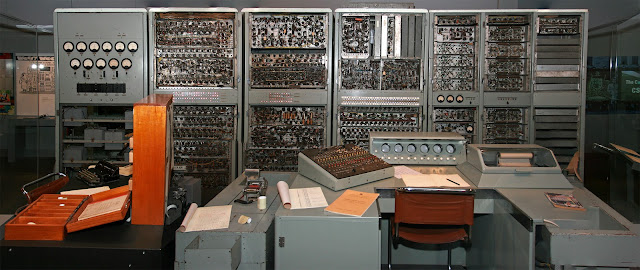

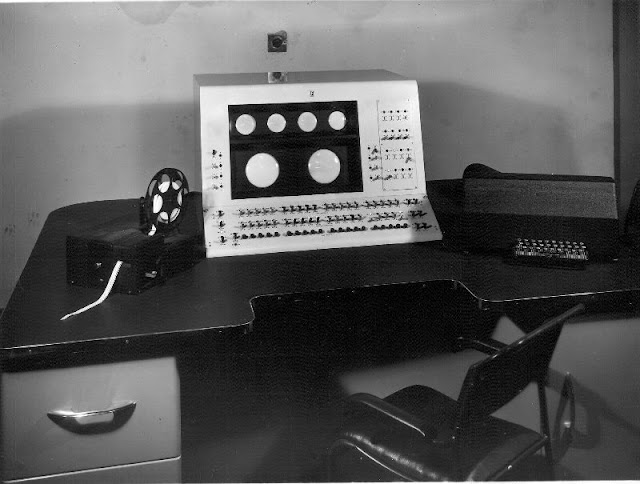

The earliest precursors to chip music can be found in the early history of computer music. In 1951, the computers CSIRAC and Ferranti Mark 1 were used to perform real-time synthesized digital music in public. One of the earliest commercial computer music albums came from the First Philadelphia Computer Music Festival, held August 25, 1978, as part of the Personal Computing '78 show. The First Philadelphia Computer Music Festival recordings were published by Creative Computing in 1979. The Global TV program Science International (1976-9) credited a PDP-11/10 for the music.

CSIRAC computer

Ferranti Mark 1 computer

Before the appearance of microcomputers at the end of the 1970s, digital arcade games provided the primary computing experience for people outside of financial data centers, university labs, and military research facilities. Because arcade cabinets were installed in loud public spaces like bars and roller-skating rinks, playing these games was likely accompanied by the sound of a nearby radio, DJ, or jukebox playing the latest disco and progressive rock. In the early 1980s, computer gaming followed computers into the privacy of the home. The sound produced by arcade cabinets might have competed with other environmental noises, but many of the earliest home computer games included only a brief theme, a few sound effects, or no sound at all. The general-purpose home platforms were not as well suited to audio reproduction as the custom-built arcade cabinets. Nevertheless, during the first few years of the 1980s, the number of platforms diversified, and each new design provided a different set of affordances for the growing number of computer music composers to explore.

The Apple II home computer, released in 1977, included a single speaker inside its case that could be programmed to play simple musical phrases or sound effects (Weyhrich 2008). In-game music was very rare because memory for storing audio data was limited and audio playback was costly in central processing unit (CPU) cycles (note 1). The Atari VCS game console, released the same year as the Apple II, was designed to be attached to a television. Its television interface adapter (TIA) controlled both the audio and video output signals.

Steve Jobs demonstrating the Apple II

Atari 2600 VCS

Although the TIA could produce two voices simultaneously, it was notoriously difficult to tune (Slocum 2003). Rather than include multivoice harmonic passages for a machine with unpredictable playback capacity, games such as Atari's Missile Command implemented rhythmic themes using controlled bursts of noise for percussion instruments (Fulop 1981). Programmers charged with interpreting recognizable musical themes from arcade games, films, or pop groups were less free to experiment. Data Age's Journey Escape (1982), billed as "the first rock video game," struggled against the tonal limitations of the TIA in its squeaky interpretation of Journey's hit song "Don't Stop Believin'," while Atari's E.T.: The Extra-Terrestrial (1982) presented a harmonically accurate re-creation of the original theme.

Example of Missile Command gameplay and sounds (Atari 1981)

Theme from Journey Escape (Data Age 1982)

Theme from Atari's E.T.: The Extra-Terrestrial (Atari 1982)

Continuous music was, if not fully introduced, then arguably foreshadowed as one of the prominent features of future video games as early as 1978, when sound was used to keep a regular beat in a few popular games. Space Invaders (Midway, 1978) set an important precedent for continuous music with a descending four-tone loop of marching alien feet that sped up as the game progressed. Arguably, Space Invaders and Asteroids (Atari, 1979, with a two-note “melody”) represent the first examples of continuous music in games, depending on how one defines music. Music was slow to develop because it was difficult and time-consuming to program on the early machines, as Nintendo composer Hirokazu “Hip” Tanaka explains: “Most music and sound in the arcade era (Donkey Kong and Mario Brothers) was designed little by little, by combining transistors, condensers and resistance. And sometimes music and sound were even created directly into the CPU port by writing 1s and 0s, and outputting the wave that becomes sound at the end. In the era when ROM capacities were only 1k or 2k, you had to create all the tools by yourself. The switches that manifest addresses and data were placed side by side, so you have to write something like ‘1, 0, 0, 0, 1’ literally by hand.” A combination of the arcade’s environment and the difficulty of producing sounds led to the primacy of sound effects over music in this early stage of game audio’s history.

Donkey Kong sounds and gameplay (Nintendo 1981)

By 1980, arcade manufacturers were including dedicated sound chips known as programmable sound generators (PSGs) on their circuit boards, and more tonal background music and more elaborate sound effects developed. Some of the earliest examples of repeating musical loops in games were found in Rally X (Namco/Midway, 1980), which had a six-bar loop (one bar repeated four times, followed by the same melody transposed to a lower pitch), and Carnival (Sega, 1980), which used Juventino Rosas' "Over the Waves" (a waltz of ca. 1889). Whereas Rally X relied on sampled sound played through a digital-to-analog converter, Carnival used the most popular of the early PSG sound chips, the General Instrument AY-3-8910. As with most PSG sound chips, the AY series could play three simultaneous square-wave tones as well as white noise. Although many early sound chips shared this four-channel functionality, the range of notes available varied considerably from chip to chip, set by what was known as a tone register or frequency divider. In the AY-3-8910 this register was 12 bits wide, allowing 4,096 distinct pitch settings. Instrument sounds were shaped by an envelope generator, which in general terms controls the attack, decay, sustain, and release (ADSR) of a sound wave; adjusting these stages sets how a note's amplitude (and, on chips that have a filter, its filter cutoff) changes over time.
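To make the tone-register idea concrete, here is a minimal Python sketch of how a 12-bit divider maps to pitch. It assumes the commonly documented AY-3-8910 relation f = clock / (16 × period) and a hypothetical 1.789773 MHz input clock; real boards, Carnival's included, may have used a different clock. It picks the nearest period for a target note and reports how far off the result is.

```python
import math

# Hypothetical input clock in Hz; real boards used different values.
CLOCK_HZ = 1_789_773
MAX_PERIOD = 0xFFF  # 12-bit tone register: 4,095 is the largest divider


def tone_period(freq_hz, clock_hz=CLOCK_HZ):
    """Nearest 12-bit tone period for freq_hz, using f = clock / (16 * period)."""
    period = round(clock_hz / (16 * freq_hz))
    return max(1, min(MAX_PERIOD, period))


def tone_freq(period, clock_hz=CLOCK_HZ):
    """Frequency actually produced by a given tone period."""
    return clock_hz / (16 * period)


def cents_error(target_hz, actual_hz):
    """Tuning error in cents (100 cents = one equal-tempered semitone)."""
    return 1200 * math.log2(actual_hz / target_hz)


if __name__ == "__main__":
    for name, midi in [("A2", 45), ("A4", 69), ("A7", 105)]:
        target = 440.0 * 2 ** ((midi - 69) / 12)
        period = tone_period(target)
        actual = tone_freq(period)
        print(f"{name}: period {period}, {actual:.2f} Hz, {cents_error(target, actual):+.1f} cents")
```

With the assumed clock, A4 lands on period 254 (about 440.4 Hz), while A7 only gets period 32 and sounds roughly 12 cents flat. Large divider values give fine pitch steps and small ones give coarse steps, which is why the top of a PSG's range tends to drift out of tune.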

AY-3-8910 sound chip

Gameplay and music from Carnival (Sega 1980)

Despite (or perhaps because of) the challenges presented, some developers embraced the limitations of these early home computing platforms. In preparation for the development of Activision's Pressure Cooker in 1983, Garry Kitchen determined a set of pitches that the Atari TIA could reliably reproduce. He then hired a professional jingle writer to compose theme music using only the available pitches. The resulting song is heard playing in two-part harmony on both TIA audio channels during the title screen. Pressure Cooker further challenged the audio conventions of the Atari VCS by including a nonstop soundtrack during game play. One of the TIA's voices repeats a simple, two-bar bass line, while the other is free to produce sound effects in response to in-game events.
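Kitchen's approach can be imitated with a short search: enumerate every pitch the TIA can produce and keep only those that land close to an equal-tempered note. The Python sketch below is not his actual procedure. It assumes an NTSC audio clock of about 31.4 kHz (the 3.579545 MHz color clock divided by 114), treats the 5-bit AUDF register as a divider, and assumes two "pure tone" waveform settings that divide the result by 2 and by 6; the 10-cent tolerance is arbitrary.

```python
import math

# Assumed NTSC TIA audio clock: 3.579545 MHz color clock divided by 114 (about 31.4 kHz).
TIA_AUDIO_CLOCK_HZ = 3_579_545 / 114
# Assumed extra divisors for two "pure tone" waveform (AUDC) settings.
PURE_TONE_DIVISORS = {"div2": 2, "div6": 6}
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz):
    """Closest equal-tempered MIDI note and the error in cents."""
    midi = 69 + 12 * math.log2(freq_hz / 440.0)
    nearest = round(midi)
    return nearest, (midi - nearest) * 100


def usable_pitches(max_cents=10.0):
    """(note, AUDF, waveform, Hz, cents) for every TIA pitch within max_cents of a note."""
    result = []
    for label, divisor in PURE_TONE_DIVISORS.items():
        for audf in range(32):  # AUDF is a 5-bit frequency divider
            freq = TIA_AUDIO_CLOCK_HZ / (divisor * (audf + 1))
            midi, cents = nearest_note(freq)
            if abs(cents) <= max_cents:
                name = f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"
                result.append((name, audf, label, round(freq, 1), round(cents, 1)))
    return result


if __name__ == "__main__":
    for row in usable_pitches():
        print(row)
```

Only a subset of the 64 candidate pitches survives the filter (AUDF 29 with the divide-by-2 setting, for instance, lands almost exactly on C5), which illustrates why a VCS composer had to write within a restricted, irregular set of notes.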

Title screen and game play from Pressure Cooker (Activision 1983).

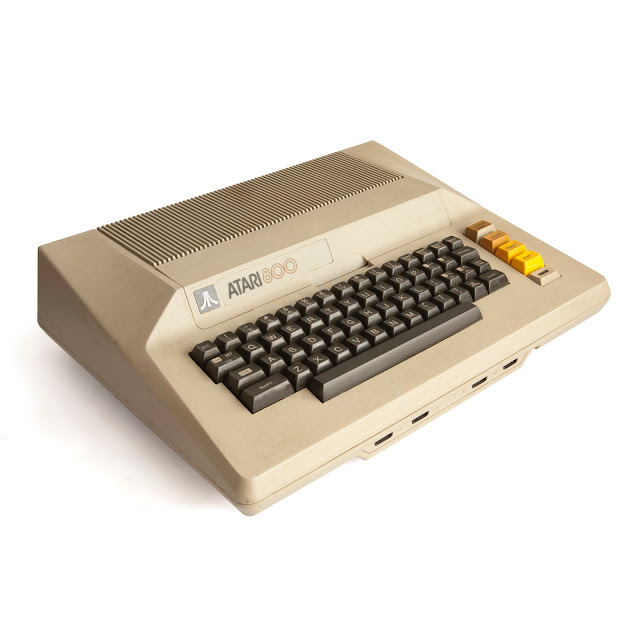

The Atari 8-bit family is a series of 8-bit home computers introduced by Atari, Inc. in 1979 and manufactured until 1992. All are based on the MOS Technology 6502 CPU running at 1.79 MHz, roughly twice that of similar designs, and were the first home computers designed with custom co-processor chips. This architecture allowed the Atari designs to offer graphics and sound capabilities that were more advanced than contemporary machines like the Apple II or Commodore PET, and gaming on the platform was a major draw; Star Raiders is widely considered the platform's killer app. Another computer with custom graphics hardware and similar performance would not appear until the Commodore 64 in 1982.

Atari 800 8-bit computer

The Pot Keyboard Integrated Circuit (POKEY) is a digital I/O chip found in the Atari 8-bit family of home computers and in many arcade games of the 1980s. It was commonly used to digitize the positions of potentiometers (such as game paddles) and to scan matrices of switches (such as a computer keyboard). POKEY is also well known for its sound effect and music generation capabilities, producing a distinctive square-wave sound popular among chiptune aficionados. The LSI chip has 40 pins and is identified as C012294. POKEY was designed by Atari employee Doug Neubauer, who also programmed the original Star Raiders.
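POKEY's pitch also comes from a frequency divider. The sketch below uses the relation as commonly documented for Atari's hardware, Fout = Fin / (2 × (AUDF + M)), where M is 1 for the 64 kHz and 15 kHz base clocks, 4 for an 8-bit channel on the 1.79 MHz clock, and 7 when two channels are joined into a 16-bit divider on that clock. The clock values are rounded NTSC figures and, like the offsets, should be treated as assumptions.

```python
# Assumed NTSC POKEY input clocks in Hz, rounded; PAL machines differ slightly.
CLOCKS_HZ = {"1.79MHz": 1_789_790, "64kHz": 63_921, "15kHz": 15_700}


def pokey_freq(audf, clock="64kHz", sixteen_bit=False):
    """Square-wave output frequency, sketching Fout = Fin / (2 * (AUDF + M))."""
    limit = 0xFFFF if sixteen_bit else 0xFF
    if not 0 <= audf <= limit:
        raise ValueError("AUDF value out of range")
    # Assumed offset M: 4 (8-bit) or 7 (16-bit) on the 1.79 MHz clock, otherwise 1.
    if clock == "1.79MHz":
        m = 7 if sixteen_bit else 4
    else:
        m = 1
    return CLOCKS_HZ[clock] / (2 * (audf + m))


def best_audf(target_hz, clock="64kHz", sixteen_bit=False):
    """Divider value whose output is closest to target_hz."""
    limit = 0xFFFF if sixteen_bit else 0xFF
    return min(range(limit + 1),
               key=lambda audf: abs(pokey_freq(audf, clock, sixteen_bit) - target_hz))
```

Under these assumptions, A4 (440 Hz) falls on AUDF 72 in 8-bit mode on the 64 kHz clock (about 437.8 Hz), whereas a joined 16-bit channel on the 1.79 MHz clock reaches AUDF 2027 (about 439.97 Hz): joining channels trades a voice for much finer tuning.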

Some of Atari's arcade systems use multi-core versions with 2 or 4 POKEY chips in a single package for more sound voices. The Atari 7800 allows a game cartridge to contain a POKEY, providing better sound than the system's audio chip. Only two games make use of this: the ports of Ballblazer and Commando.

This awesome 8-bit composition shows the power behind the POKEY sound chip.

Compilation of demos showing the audio and video capabilities of Atari's 8-bit computers

No longer manufactured, POKEY is now emulated in software by classic arcade and Atari 8-bit emulators and with the SAP player.

In 1980, most home computer music remained limited to single-voice melodies and lacked dynamic range. Robert "Bob" Yannes, a self-described "electronic music hobbyist," saw the sound hardware in first-generation microcomputers as "primitive" and suggested that they had been "designed by people who knew nothing about music" (Yannes 1996). In 1981, he began to design a new audio chip for MOS Technology called the SID (Sound Interface Device). In contrast to the kludgy Atari TIA, Yannes intended the SID to be as useful in professional synthesizers as it would be in microcomputers. Later that year, Commodore decided to include MOS Technology's new SID alongside a dedicated graphics chip in its next microcomputer, the Commodore 64. Unlike the Atari architecture, in which a single piece of hardware controlled both audio and video output, the Commodore machine afforded programmers greater flexibility in their implementation of graphics and sound.

SID (Sound Interface Device) 6581 chip used in C64 computers

Technically, the SID enables a broad sonic palette at a low cost to the attendant CPU by implementing common synthesizer features in hardware. The chip consists of three oscillators, each capable of producing four different waveforms: square, triangle, sawtooth, and noise (note 3). The output of each oscillator is then passed through an envelope generator that shapes each note's volume over time, from short plucks to long, droning notes. Modulation effects such as ring modulation and oscillator sync, along with a programmable filter, can be applied to create, for example, the ringing sounds of bells or chimes.
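The Commodore 64 Programmer's Reference Guide (cited below) gives the SID's pitch as Fout = Fn × Fclk / 16,777,216, where Fn is a 16-bit frequency register added to a 24-bit phase accumulator once per clock cycle. The Python sketch below uses that relation with an assumed PAL clock of 985,248 Hz and derives rough sawtooth, triangle, and pulse waves from the accumulator's top bits. It ignores the envelope, filter, and ring modulation, and substitutes Python's random generator for the SID's noise shift register, so it only illustrates the principle.

```python
import random

# Assumed PAL C64 clock in Hz (NTSC machines ran at about 1.022727 MHz).
PAL_CLOCK_HZ = 985_248


def sid_freq_register(freq_hz, clock_hz=PAL_CLOCK_HZ):
    """16-bit frequency value Fn such that Fout = Fn * clock / 2**24."""
    return max(0, min(0xFFFF, round(freq_hz * 2**24 / clock_hz)))


def render_voice(fn, waveform, n_samples, sample_rate=44_100,
                 clock_hz=PAL_CLOCK_HZ, pulse_width=0x800):
    """Rough, unfiltered 12-bit output (0..4095) from a 24-bit phase accumulator."""
    step = fn * clock_hz / sample_rate  # Fn is added once per clock cycle
    phase = 0.0
    rng = random.Random(0)  # stand-in for the SID's noise shift register
    out = []
    for _ in range(n_samples):
        acc = int(phase) & 0xFFFFFF
        top12 = acc >> 12
        if waveform == "saw":
            value = top12
        elif waveform == "triangle":
            # Rise during the first half of the cycle, fall during the second.
            value = ((acc >> 11) ^ (0xFFF if acc & 0x800000 else 0)) & 0xFFF
        elif waveform == "pulse":
            value = 0xFFF if top12 >= pulse_width else 0
        elif waveform == "noise":
            value = rng.randrange(4096)
        else:
            raise ValueError(f"unknown waveform: {waveform}")
        out.append(value)
        phase = (phase + step) % 2**24
    return out
```

For example, `render_voice(sid_freq_register(440.0), "pulse", 441, pulse_width=0x400)` produces about 4.4 cycles of a narrow pulse wave; changing `pulse_width` alters the timbre without changing the pitch.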

Commodore C64 SID Collection

Several peripherals and cartridges were developed to take advantage of the music-making possibilities of the Commodore 64's SID chip, but even the best of these products could not match the flexibility and freedom of working with the chip's features directly by writing programs in 6502 assembly language (Pickens and Clark 2001). Of course, although the SID's implementation of sound synthesis would be familiar to electronic musicians of the time, programming in assembly was a very different experience from turning the knobs and sliding the faders of a comparable commercial synthesizer like the Roland Juno-6. Early Commodore 64 composers had to write not only the music, but also the software to play it back.

In the mid-1980s, chiptunes and computer game music appeared largely indistinguishable. Nor was game music isolated from the rest of popular music: the songs reflected the musical interests of their composers. Most of the composers discussed here were young men living in Europe and the United States, and the influence of heavy metal, electro, New Wave pop, and progressive rock was prevalent throughout the 1980s. By assigning a distinct timbre to each of the voices, the SID could emulate the conventional instrumentation of a four-piece rock band: drums, guitar, bass, and voice (Collins 2006). For example, Martin Galway's 11-minute title track for Origin Systems' Times of Lore (1988) reflects the influence of classical guitar in heavy metal. Like the opening section of Metallica's "Fade to Black" from 1984, Times of Lore begins with an arpeggiated chord progression played on one voice, with a harmonized "guitar solo" layered on top using a second voice.

Theme from Origin Systems' Times of Lore (1988); music composed by Martin Galway.

The NES was introduced in Europe in 1986 but never achieved the success it found in the United States (Nintendo 2008). As the decade came to a close, European gamers appear to have favored programmable home computers like the Atari ST, Amiga, and IBM PC-compatible machines over closed game consoles like the NES, Game Boy, and Sega Genesis. This divide in platform preferences explains why, in comments made in 2002, composer Rob Hubbard recalled "[missing] out on a lot of [chiptune] developments" by moving to the United States in 1987 (Hubbard 2002).

NES Music Compilation

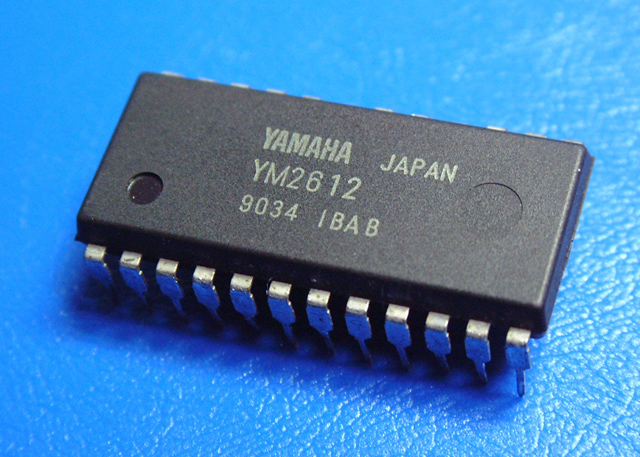

A major advance for chip music was the introduction of frequency modulation synthesis (FM synthesis), first commercially released by Yamaha for their digital synthesizers and FM sound chips, which began appearing in arcade machines in the early 1980s. Arcade game composers using FM synthesis at the time included Konami's Miki Higashino (Gradius, Yie Ar Kung-Fu, Teenage Mutant Ninja Turtles) and Sega's Hiroshi Kawaguchi (Space Harrier, Hang-On, Out Run).
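The core of FM synthesis fits in one line: a modulating sine wave is added to the phase of a carrier sine wave, and the modulation index controls how many sidebands, and therefore how bright a timbre, result. The Python sketch below is the textbook two-operator form. It is an idealized illustration, not a model of any particular Yamaha chip, which chain several operators (four per channel on the YM2612) and add envelopes and feedback.

```python
import math


def fm_tone(carrier_hz, ratio, index, duration_s=0.5, sample_rate=44_100):
    """Two-operator FM: sin(2*pi*fc*t + index * sin(2*pi*fm*t)), with fm = fc * ratio."""
    modulator_hz = carrier_hz * ratio
    samples = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return samples
```

With `index=0` the result is a plain sine wave. With an integer ratio such as 1.0, the sidebands fall on harmonics of the carrier; a ratio such as √2 (about 1.414) produces inharmonic, bell-like spectra.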

Yamaha YM2612 chip, found in the popular Sega Genesis 16-bit console

By the early 1980s, significant improvements to personal computer game music were made possible by the introduction of digital FM synthesis. Yamaha began manufacturing FM synth boards for Japanese computers such as the NEC PC-8801 and PC-9801 in the early 1980s, and by the mid-1980s the PC-8801 and FM-7 had built-in FM sound. This allowed computer game music to reach a greater complexity than the simplistic beeps of internal speakers. These FM synth boards produced a "warm and pleasant sound" that musicians such as Yuzo Koshiro and Takeshi Abo used to produce music that is still highly regarded within the chiptune community. Japanese personal computers of the period, such as the NEC PC-88 and PC-98, also featured audio programming languages such as Music Macro Language (MML) and MIDI interfaces, which were most often used to produce video game music. Fujitsu also released the FM Sound Editor software for the FM-7 in 1985, giving users a friendly interface for creating and editing synthesized music.
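MML describes music as a compact string of text commands. Dialects differed from machine to machine, so the Python sketch below parses a hypothetical, simplified subset: T sets the tempo, O the octave, L the default note length, A to G play notes (with # or + for sharp and - for flat, an optional length, and an optional dot), R is a rest, and > and < move up and down an octave (the direction of > varied between dialects). It assumes O4 A is 440 Hz and returns (frequency, seconds) pairs, with None marking rests.

```python
import re

SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
ACCIDENTALS = {"#": 1, "+": 1, "-": -1}
# A note or rest, a numeric command (T/O/L), or an octave shift.
TOKEN = re.compile(r"([A-GR])([#+-]?)(\d*)(\.?)|([TOL])(\d+)|([<>])")


def parse_mml(text):
    """Parse a simplified MML subset into (frequency_hz or None, seconds) pairs."""
    tempo, octave, default_len = 120, 4, 4
    events = []
    for m in TOKEN.finditer(text.upper().replace(" ", "")):
        note, accidental, length, dot, cmd, value, shift = m.groups()
        if cmd:
            if cmd == "T":
                tempo = int(value)
            elif cmd == "O":
                octave = int(value)
            else:
                default_len = int(value)
        elif shift:
            octave += 1 if shift == ">" else -1
        else:
            beats = 4 / int(length or default_len)  # a quarter note is one beat
            if dot:
                beats *= 1.5
            seconds = beats * 60 / tempo
            if note == "R":
                events.append((None, seconds))
            else:
                midi = 12 * (octave + 1) + SEMITONES[note] + ACCIDENTALS.get(accidental, 0)
                events.append((440.0 * 2 ** ((midi - 69) / 12), seconds))
    return events
```

For example, `parse_mml("T120 O4 L8 CDEFG")` yields five eighth notes of 0.25 seconds each, rising from C4 to G4.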

The widespread adoption of FM synthesis by consoles would later be one of the major advances of the 16-bit era, by which time 16-bit arcade machines were using multiple FM synthesis chips. A major chiptune composer during this period was Yuzo Koshiro. Despite later advances in audio technology, he would continue to use older PC-8801 hardware to produce chiptune soundtracks for series such as Streets of Rage (1991–1994) and Etrian Odyssey (2007–present). His soundtrack to The Revenge of Shinobi (1989) featured house and progressive techno compositions that fused electronic dance music with traditional Japanese music. The soundtrack for Streets of Rage 2 (1992) is considered "revolutionary" and "ahead of its time" for its "blend of swaggering house synths, dirty electro-funk and trancey electronic textures that would feel as comfortable in a nightclub as a video game." For the soundtrack to Streets of Rage 3 (1994), Koshiro created a new composition method called the "Automated Composing System" to produce "fast-beat techno like jungle," resulting in innovative and experimental sounds generated automatically. Koshiro also composed chiptune soundtracks for series such as Dragon Slayer, Ys, Shinobi, and ActRaiser. Another important FM synth composer was the late Ryu Umemoto, who composed chiptune soundtracks for various visual novel and shoot 'em up games.

Streets of Rage Soundtrack (Sega 1991)

The Amiga is a family of personal computers sold by Commodore in the 1980s and 1990s. Based on the Motorola 68000 family of microprocessors, the machine had a custom chipset with graphics and sound capabilities that were unprecedented for the price, and a pre-emptive multitasking operating system called AmigaOS. The Amiga provided a significant upgrade from earlier 8-bit home computers, including Commodore's own C64.

The sound chip, named Paula, supports four PCM-sample-based sound channels (two for the left speaker and two for the right) with 8-bit resolution for each channel and a 6-bit volume control per channel. The analog output is connected to a low-pass filter, which filters out high-frequency aliases when the Amiga is using a lower sampling rate (see Nyquist frequency). The brightness of the Amiga's power LED indicates the status of the low-pass filter: the filter is active when the LED is at normal brightness and deactivated when the LED is dimmed (or off, on older A500 models). On the Amiga 1000 (and the first Amiga 500 and Amiga 2000 models), the power LED had no relation to the filter's status, and a wire had to be soldered manually between pins on the sound chip to disable the filter. Paula can read directly from the system's RAM using direct memory access (DMA), making sound playback possible without CPU intervention.
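Each Paula channel's playback rate is set by a period register: the channel outputs one sample every `period` ticks of a fixed clock, so rate = clock / period. The Python sketch below assumes the commonly cited clock values (3,546,895 Hz on PAL machines, 3,579,545 Hz on NTSC) and an assumed minimum period of 124 for DMA playback, and reports the Nyquist limit that the low-pass filter guards against.

```python
# Assumed Paula timing clocks in Hz; PAL and NTSC machines differ.
PAULA_CLOCK_HZ = {"PAL": 3_546_895, "NTSC": 3_579_545}
MIN_PERIOD = 124  # assumed lower bound for DMA-driven playback


def sample_rate(period, video="PAL"):
    """Playback rate in Hz for a channel period register value."""
    if period < MIN_PERIOD:
        raise ValueError("period below the assumed DMA limit")
    return PAULA_CLOCK_HZ[video] / period


def period_for_rate(rate_hz, video="PAL"):
    """Nearest period register value for a desired playback rate."""
    return max(MIN_PERIOD, round(PAULA_CLOCK_HZ[video] / rate_hz))


def nyquist(period, video="PAL"):
    """Highest frequency the channel can represent without aliasing."""
    return sample_rate(period, video) / 2
```

For example, `period_for_rate(8287)` returns 428 on a PAL machine, and the same period gives about 8,363 Hz on NTSC, so identical data plays back at a slightly higher pitch on NTSC machines.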

Paula is primarily the Amiga's sound chip, capable of playing four-channel, 8-bit stereo sound; it also serves as the floppy drive controller.

Although the hardware is limited to four separate sound channels, software such as OctaMED uses software mixing to provide eight or more virtual channels. It is also possible for software to combine two hardware channels into a single channel with 14-bit resolution by setting their volumes so that one channel contributes the most significant bits and the other the least significant bits.
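The 14-bit trick works because Paula scales each channel's 8-bit sample by its volume setting (up to 64) before channels on the same side are summed. The Python sketch below splits a signed 14-bit value into a high byte played at volume 64 and a low 6-bit part played at volume 1, so that high × 64 + low reconstructs the original. It assumes an idealized, perfectly linear mixer and that both channels sit on the same side (channels 0 and 3 on the left, 1 and 2 on the right); real hardware is not perfectly linear, which this sketch ignores.

```python
def split_14bit(sample):
    """Split a signed 14-bit sample into (high, low) 8-bit channel values."""
    if not -8192 <= sample <= 8191:
        raise ValueError("not a signed 14-bit value")
    high = sample >> 6    # arithmetic shift: -128..127, played at volume 64
    low = sample & 0x3F   # 0..63, fits in a signed byte, played at volume 1
    return high, low


def mix(high, low, vol_high=64, vol_low=1):
    """Idealized Paula output for two channels: each contributes sample * volume."""
    return high * vol_high + low * vol_low
```

Every value from -8192 to 8191 survives the round trip `mix(*split_14bit(s)) == s` under this idealized model.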

The quality of the Amiga's sound output, and the fact that the hardware is ubiquitous and easily addressed by software, were standout features of Amiga hardware unavailable on PC platforms for years. Third-party sound cards exist that provide DSP functions, multi-track direct-to-disk recording, multiple hardware sound channels and 16-bit and beyond resolutions. A retargetable sound API called AHI was developed allowing these cards to be used transparently by the OS and software.

Compilation of Amiga demos created by computer enthusiasts in Europe, especially in the Nordic countries (Finland, Denmark, and elsewhere in Scandinavia) and, to a certain extent, Germany. These demos were made to show off the sound and video capabilities of the Amiga computers (I will talk more about the demoscene in the second part of this series of posts).

The Super Nintendo Entertainment System (officially abbreviated the Super NES or SNES, and commonly shortened to Super Nintendo) is a 16-bit home video game console developed by Nintendo that was released in 1990 in Japan and South Korea, 1991 in North America, 1992 in Europe and Australasia (Oceania), and 1993 in South America.

The SNES is Nintendo's second home console, following the Nintendo Entertainment System (NES). The console introduced advanced graphics and sound capabilities compared with other consoles at the time. Additionally, development of a variety of enhancement chips (which were integrated on game circuit boards) helped to keep it competitive in the marketplace.

Super NES Music was considered legendary, here's why

Behind the Super NES's impressive sound capabilities is the S-SMP audio processing unit. It consists of an 8-bit Sony SPC700 processor core, a 16-bit DSP, 64 KB of SRAM shared by the two chips, and a 64-byte boot ROM. The audio subsystem is almost completely independent from the rest of the system: it is clocked at a nominal 24.576 MHz in both NTSC and PAL systems and can only communicate with the CPU via four registers on Bus B. It was designed by Ken Kutaragi and manufactured by Sony.

Nintendo S-SMP audio processing unit

The S-SMP is located on the left side of the sound module. It shares 64 KB of RAM with the S-DSP (which actually generates the sound) and runs at 2.048 MHz, obtained by dividing the 24.576 MHz crystal by 12. It has six internal registers and can execute 256 opcodes. The SPC700 instruction set is quite similar to that of the 6502 microprocessor family but includes additional instructions, such as XCN (eXChange Nibble), which swaps the upper and lower 4-bit portions of the 8-bit accumulator, and an 8-by-8-to-16-bit multiply instruction.
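The two SPC700 additions mentioned above are easy to model. The Python sketch below only computes results and ignores flag updates; the MUL YA mnemonic and its register convention (Y receives the high byte, A the low byte) follow common SPC700 documentation and are stated here as assumptions.

```python
def xcn(a):
    """XCN: swap the upper and lower 4-bit halves of the 8-bit accumulator."""
    a &= 0xFF
    return ((a << 4) | (a >> 4)) & 0xFF


def mul_ya(y, a):
    """MUL YA: unsigned 8x8 -> 16-bit product, returned as (Y high byte, A low byte)."""
    product = (y & 0xFF) * (a & 0xFF)
    return product >> 8, product & 0xFF
```

For example, `xcn(0x3C)` gives `0xC3`, and `mul_ya(0xFF, 0xFF)` gives `(0xFE, 0x01)`, the two halves of 65,025.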

Beyond the SNES, the SPC700 core also appears in Sony's CXP82832/82840/82852/82860 microcontroller series. For example, the Proson A/V receiver 2300 DTS uses a CXP82860 microcontroller built around the SPC700 core.

The S-DSP is capable of producing and mixing 8 simultaneous voices at any relevant pitch and volume in 16-bit stereo at a sample rate of 32 kHz. It supports voice panning, ADSR envelope control, echo with filtering (via a programmable 8-tap FIR), and the use of noise as a sound source (useful for certain sound effects such as wind). S-DSP sound samples are stored in RAM in a compressed format called BRR. Communication between the S-SMP and the S-DSP is carried out via memory-mapped I/O.
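BRR (bit rate reduction) packs 16 samples into a 9-byte block: one header byte followed by eight bytes of 4-bit values. The header selects a shift amount and one of four prediction filters, each of which adds a weighted sum of the two previous output samples. The Python sketch below follows the layout and coefficients given in common S-DSP documentation such as Anomie's (cited below), but uses floating point and skips the hardware's exact integer rounding and the special handling of shift values above 12, so treat it as an approximation.

```python
# Assumed prediction coefficients (c1, c2) applied to the previous two outputs.
FILTERS = {0: (0.0, 0.0), 1: (15 / 16, 0.0), 2: (61 / 32, -15 / 16), 3: (115 / 64, -13 / 16)}


def decode_brr_block(block, prev1=0.0, prev2=0.0):
    """Decode one 9-byte BRR block into 16 samples (simplified, floating point).

    prev1 and prev2 are the last two samples of the previous block.
    Returns (samples, loop_flag, end_flag).
    """
    if len(block) != 9:
        raise ValueError("a BRR block is 9 bytes long")
    header = block[0]
    shift = header >> 4                     # bits 7-4: shift (range)
    c1, c2 = FILTERS[(header >> 2) & 0x3]   # bits 3-2: filter number
    loop_flag = bool(header & 0x2)          # bit 1: loop
    end_flag = bool(header & 0x1)           # bit 0: end of sample
    if shift > 12:
        raise ValueError("shift values 13-15 are not handled by this sketch")
    samples = []
    for byte in block[1:]:
        for nibble in (byte >> 4, byte & 0xF):  # high nibble first
            if nibble >= 8:
                nibble -= 16                    # sign-extend the 4-bit value
            value = (nibble << shift) >> 1
            predicted = value + c1 * prev1 + c2 * prev2
            predicted = max(-32768.0, min(32767.0, predicted))  # clamp to 16 bits
            samples.append(predicted)
            prev2, prev1 = prev1, predicted
    return samples, loop_flag, end_flag
```

To decode a whole sample, a player would call this block by block, passing the last two decoded values forward as `prev1` and `prev2`, until it reaches a block with the end flag set.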

The RAM is accessed at 3.072 MHz, with accesses multiplexed between the S-SMP (1⁄3) and the DSP (2⁄3). This RAM is used to store the S-SMP code and stack, the audio samples and pointer table, and the DSP's echo buffer.
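The clock figures above fit together arithmetically. The short derivation below only reuses numbers already given (the 24.576 MHz crystal, the 1⁄3 and 2⁄3 access split, and the 32 kHz output rate) and makes the DSP's per-sample memory budget explicit:

$$
\begin{aligned}
f_{\text{RAM}} &= 3.072\ \text{MHz} = \frac{24.576\ \text{MHz}}{8} \\
f_{\text{S-SMP}} &= \tfrac{1}{3}\, f_{\text{RAM}} = 1.024\ \text{MHz} \\
f_{\text{DSP}} &= \tfrac{2}{3}\, f_{\text{RAM}} = 2.048\ \text{MHz} \\
\frac{f_{\text{DSP}}}{32\ \text{kHz}} &= \frac{2{,}048{,}000}{32{,}000} = 64
\end{aligned}
$$

In other words, the DSP has 64 RAM accesses per output sample period to share between fetching sample data for its eight voices and reading and writing the echo buffer.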

The S-SMP operates in a somewhat unconventional manner for a sound chip. On power-up or reset, the S-SMP runs its boot ROM, which the main SNES CPU uses to transfer code blocks and sound samples into the audio RAM. The code is machine code written specifically for the SPC700 instruction set, in much the same way that programs are written for the main CPU; as such, the S-SMP can be considered a coprocessor dedicated to sound on the SNES.

Since the module is mostly self-contained, the state of the APU can be saved as an .SPC file, and can be emulated in a stand-alone manner to play back all game music (except for a few games that constantly stream their samples from ROM). Custom cartridges or PC interfaces can be used to load .SPC files onto a real SNES SPC700 and DSP. The sound format name .SPC comes from the name of the audio chip core.
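Because an .SPC file is essentially a snapshot of the sound module, reading one is simple. The Python sketch below assumes the commonly documented v0.30 layout: a text signature at offset 0, the saved SPC700 registers (PC, A, X, Y, PSW, SP) starting at offset 0x25, the full 64 KB of audio RAM at 0x100, and the 128 DSP registers at 0x10100, for a minimum file size of 66,048 bytes. It ignores the optional ID666 metadata tag. A player would load these values into an emulated S-SMP and S-DSP and resume execution.

```python
import struct

# Offsets below follow the commonly documented SPC v0.30 layout (assumed).
SPC_MAGIC = b"SNES-SPC700 Sound File Data"
REGS_OFFSET = 0x25
RAM_OFFSET = 0x100
DSP_OFFSET = 0x10100
MIN_SIZE = 0x10200  # 66,048 bytes


def parse_spc(data):
    """Extract the saved CPU registers, 64 KB of audio RAM, and DSP registers."""
    if len(data) < MIN_SIZE or not data.startswith(SPC_MAGIC):
        raise ValueError("not an SPC file under the assumed layout")
    # Little-endian 16-bit PC followed by five 8-bit registers.
    pc, a, x, y, psw, sp = struct.unpack_from("<HBBBBB", data, REGS_OFFSET)
    return {
        "pc": pc, "a": a, "x": x, "y": y, "psw": psw, "sp": sp,
        "ram": data[RAM_OFFSET:RAM_OFFSET + 0x10000],
        "dsp": data[DSP_OFFSET:DSP_OFFSET + 128],
    }
```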

Bibliography:

Diaz & Driscoll. "Endless loop: A brief history of chiptunes". Transformative Works and Cultures. Retrieved July 6, 2016.

http://journal.transformativeworks.org/index.php/twc/article/view/96/94

Collins, Karen (2008). Game sound: an introduction to the history, theory, and practice of video game music and sound design. MIT Press. p. 12. ISBN 0-262-03378-X. Retrieved July 6, 2016.

"History | Corporate". Nintendo. Retrieved February 24, 2013.

http://www.nintendo.co.uk/Corporate/Nintendo-History/Nintendo-History-625945.html

"Anomie's S-DSP Doc" (text). Romhacking.net. Retrieved July 6, 2016.

"Anomie's SPC700 Doc" (text). Romhacking.net. Retrieved July 6, 2016.

"CXP82832/82840/82852/82860 CMOS 8-bit Single Chip Microcomputer" (PDF). datasheetcatalog.org (090423). Retrieved July 6, 2016.

"The Nintendo Years: 1990". June 25, 2007. p. 2. Archived from the original on August 20, 2012. Retrieved July 6, 2016.

"6581 Sound Interface Device (SID) Chip Specifications". Appendix O, Commodore 64 Programmer's Reference Guide. Retrieved July 6, 2016.

Bagnall, Brian. On The Edge: The Spectacular Rise and Fall of Commodore, pp. 231–238, 370–371. ISBN 0-9738649-0-7. Retrieved July 6, 2016.

Commodore 6581 Sound Interface Device (SID) datasheet. October 1982. Retrieved July 6, 2016.

"Inside the Commodore 64". PCWorld. November 4, 2008. Retrieved July 6, 2016.

"I. Theory of Operation". Atari Home Computer Field Service Manual - 400/800 (PDF). Atari, Inc. pp. 1–11. Retrieved July 6, 2016.

Current, Michael. "What are the SALLY, ANTIC, CTIA/GTIA, POKEY, and FREDDIE chips?". Atari 8-Bit Computers: Frequently Asked Questions. Retrieved July 6, 2016.

Hague, James (2002-06-01). "Interview with Doug Neubauer". Halcyon Days. Retrieved July 6, 2016.

"The Atari 800 Personal Computer System". Atari Museum. Accessed November 13, 2008.

http://www.atarimuseum.com/computers/8BITS/400800/ATARI800/A800.html